July 10, 2024

Human reliability often forms the benchmark against which AI stability is measured. But how do the two compare in real-world scenarios?

In terms of task accuracy, humans bring a nuanced understanding of context that AI lacks. Their ability to adapt to diverse and unexpected situations, driven by intuition and experience, is unparalleled. However, humans are prone to errors, especially over extended periods or under stress, which undermines their overall reliability.

AI systems, on the other hand, excel at consistent performance on repetitive tasks. Unlike humans, AI does not tire or get distracted, so it makes fewer errors in predefined tasks. Despite this, AI can still struggle with the contextual understanding and nuanced decision-making that come naturally to humans.

A classic example is customer service chatbots versus human representatives. AI chatbots handle a large volume of queries with remarkable speed and accuracy but fall short in addressing complex or emotionally charged issues. Human representatives, while slower and sometimes less accurate, offer empathy and complex problem-solving skills that AI currently lacks.

Balancing human intuition with AI consistency can pave the way for optimal reliability.

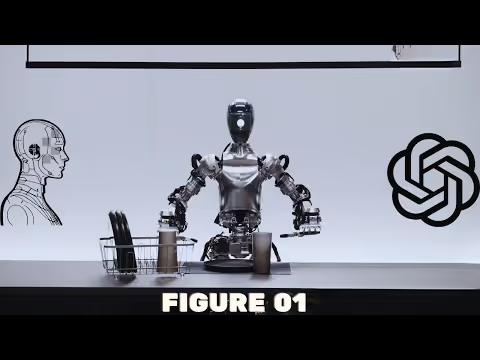

Imagine a home where robots perform household chores, including washing and putting away dishes, promising a future of seamless efficiency and convenience.

In such a scenario, reliability becomes paramount.

To understand the dynamics better, consider a robot designed for dishwashing that handles roughly 100 dish interactions a day.

Suppose this robot aims to perform with 99.9% reliability.

At 100 interactions a day, that 0.1% failure rate translates to breaking a dish about once every ten days, which most consumers would find unacceptable.
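The arithmetic behind the dishwashing figure is easy to check. The sketch below assumes roughly 100 dish interactions per day (the rate implied by one breakage every ten days at 99.9% reliability) and treats each interaction as an independent trial that fails with probability 1 − reliability. Both are simplifying assumptions, and the function name is hypothetical.

```python
def days_between_failures(reliability: float, interactions_per_day: float) -> float:
    """Expected number of days between failures.

    Assumes each interaction fails independently with probability
    (1 - reliability), so expected failures per day are
    interactions_per_day * (1 - reliability).
    """
    if not 0.0 <= reliability < 1.0:
        raise ValueError("reliability must be in [0, 1)")
    failures_per_day = interactions_per_day * (1.0 - reliability)
    return 1.0 / failures_per_day


# Hypothetical rate: about 100 dish interactions per day.
print(round(days_between_failures(0.999, 100), 1))  # → 10.0
```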

Clearly, achieving human-like reliability demands far greater precision and robustness from AI systems, highlighting the challenges and ambitions driving AI stability.

AI's growing role in everyday tasks, a beacon of technological advancement, holds vast potential. However, ensuring that these systems operate at levels matching human reliability is paramount, and it calls for meticulous attention to algorithmic precision and error reduction.

To reach this stringent level of dependability, developers must aim for "five nines" (99.999%) reliability. This is not merely a technical challenge but an engineering feat requiring continuous innovation. As AI becomes more integrated into dynamic environments, the path toward near-flawless reliability will require rigorous testing, iterative improvement, and an unwavering commitment to excellence.

Even a 99.9% reliability threshold is a significant milestone for AI systems, especially in dynamic environments, although the dishwashing example shows it is not sufficient on its own.

This level of reliability requires dedicated effort and strategic planning.

Achieving it not only boosts user trust but also paves the way for broader AI adoption.

By prioritizing rigorous testing and iterative improvement, engineers can move closer to making reliable AI an everyday reality.

Reaching a reliability rate of 99.9997%, or about three failures per million interactions, is an ambitious yet attainable goal that excites innovators and optimists alike.
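To make the gap between these targets concrete, the sketch below converts each reliability level mentioned in this post into failures per million interactions and into years between dish breakages. It reuses the hypothetical dishwashing robot (about 100 interactions per day, independent failures); real failure modes are rarely that tidy, and the helper names are illustrative only.

```python
# Hypothetical workload: about 100 dish interactions per day.
INTERACTIONS_PER_DAY = 100


def failures_per_million(reliability: float) -> float:
    """Expected failures per one million independent interactions."""
    return (1.0 - reliability) * 1_000_000


def years_between_failures(reliability: float,
                           per_day: float = INTERACTIONS_PER_DAY) -> float:
    """Mean time between failures in years, assuming independent trials."""
    days = 1.0 / (per_day * (1.0 - reliability))
    return days / 365.0


for label, r in [("99.9%", 0.999), ("five nines", 0.99999), ("99.9997%", 0.999997)]:
    print(f"{label:>10}: {failures_per_million(r):8.1f} failures per million, "
          f"one breakage every {years_between_failures(r):5.2f} years")
```

Under these assumptions, moving from 99.9% to 99.9997% stretches the interval between breakages from about ten days to roughly nine years.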

The journey towards this milestone entails rigorous methodologies and strategic implementation.

Maintaining a relentless focus on reliability is paramount, especially as AI progresses into critical functions.

Developers must embrace multifaceted approaches, including exhaustive testing, rigorous checks, and balanced optimization.
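Exhaustive testing matters partly because high reliability is hard to demonstrate. One standard statistical argument: if a system passes n independent trials with zero failures, a per-trial failure rate p can be ruled out at confidence c once (1 − p)^n ≤ 1 − c. At 95% confidence this gives the well-known "rule of three," n ≈ 3/p. The sketch below assumes independent, identically distributed trials, which real deployments often violate, so treat the numbers as lower bounds on the testing effort; the function name is hypothetical.

```python
import math


def trials_needed(max_failure_rate: float, confidence: float = 0.95) -> int:
    """Failure-free trials needed to claim, at the given confidence, that the
    true per-trial failure rate is below max_failure_rate.

    With zero failures in n independent trials, rate p is ruled out when
    (1 - p) ** n <= 1 - confidence, i.e. n >= ln(1 - c) / ln(1 - p).
    """
    if not 0.0 < max_failure_rate < 1.0 or not 0.0 < confidence < 1.0:
        raise ValueError("max_failure_rate and confidence must be in (0, 1)")
    # log1p(-p) computes ln(1 - p) accurately for very small p.
    return math.ceil(math.log(1.0 - confidence) / math.log1p(-max_failure_rate))


for reliability in (0.999, 0.99999, 0.999997):
    n = trials_needed(1.0 - reliability)
    print(f"{reliability * 100:g}%: about {n:,} consecutive failure-free trials")
```

For 99.9997% reliability, this works out to roughly a million consecutive failure-free trials before the claim is statistically supported.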

These mechanisms are not just about avoiding errors but are fundamental to establishing sustained, dependable AI performance that meets high user expectations.

Ultimately, the pathway to this pinnacle of reliability is paved with diligent effort and sustained discipline.

Achieving AI stability encompasses a myriad of challenges that require strategic foresight and unwavering commitment. From handling dynamic environments to ensuring seamless human-robot interactions, the quest for reliable AI functionality is both intricate and daunting.

These challenges amplify as AI systems become more integrated into everyday applications, necessitating continual advancements and innovative solutions.

Navigating dynamic environments presents unique challenges for AI stability, particularly in robotics and automation.

Success in dynamic environments hinges on continuous learning and adaptation. AI's ability to remain reliable amidst change is crucial.

Investments in robust algorithms and adaptive technologies are essential to handle the complexities of dynamic environments consistently and effectively.

AI must navigate unpredictable scenarios effectively.

In real-world applications, AI encounters countless challenges. The environments in which these systems operate are often subject to rapid change, presenting unanticipated obstacles that require immediate response. Consequently, AI must not only perform well under ideal conditions but also demonstrate resilience in unpredictable contexts.

Such adaptability is crucial for AI reliability.

Consider emergency response robots: they must traverse debris-strewn landscapes, navigate collapsing structures, and make critical decisions within seconds, all while maintaining functionality and accuracy.

Therefore, consistent advancements in algorithmic development and system robustness are imperative. Achieving this goal demands significant investment in research and development, which underpins AI's potential to thrive in diverse, unpredictable scenarios, bolstering its application in real-world settings.

Self-driving cars symbolize the forefront of AI stability and innovation. Whether navigating through bustling city streets or cruising on highways, these autonomous vehicles illustrate the immense potential and challenges of achieving reliable AI performance in dynamic environments.

Like emergency response robots, self-driving cars depend on advanced sensory inputs and real-time data analysis to maneuver safely. They must handle unpredictable pedestrians, erratic drivers, and variable weather conditions, all while maintaining stringent safety standards. Robustness and precision are therefore prerequisites for deploying self-driving technology widely.

Nevertheless, the journey towards fully autonomous vehicles is ongoing. While significant strides have been made, incidents like the temporary removal of Cruise vehicles from roads indicate that achieving near-perfect reliability in self-driving technology is a formidable task. This mirrors the high expectations and stringent reliability standards required for AI applications in other sectors as well.

Self-driving cars thus encapsulate the broader challenges and triumphs of the AI field. As researchers continue to refine these systems, the lessons learned are likely to carry over to other AI applications, strengthening the foundation for a future where autonomous technology integrates seamlessly into daily life.

AI stability, particularly in software applications, is critical to ensuring seamless user experiences and high dependability.

For instance, in cloud-based systems, maintaining AI stability means preventing downtimes and ensuring constant availability. These systems must handle vast amounts of data, which requires robust error-handling and failover mechanisms to achieve near-perfect uptime.
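A common way to combine error handling with failover is to retry a failing service a few times with exponential backoff, then switch to a backup replica. The sketch below illustrates that pattern in plain Python; the function and replica names are hypothetical, and treating every exception as transient is a deliberate simplification (production systems usually retry only specific, known-transient errors).

```python
import time
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")


def call_with_failover(
    replicas: Sequence[Callable[[], T]],
    retries_per_replica: int = 2,
    base_delay_s: float = 0.1,
) -> T:
    """Call each replica in order; retry failures with exponential backoff,
    then fail over to the next replica. Raises if every replica fails."""
    last_error: Optional[Exception] = None
    for replica in replicas:
        for attempt in range(retries_per_replica + 1):
            try:
                return replica()
            except Exception as exc:  # simplification: treat every error as transient
                last_error = exc
                if attempt < retries_per_replica:
                    # Wait 1x, 2x, 4x ... the base delay between attempts.
                    time.sleep(base_delay_s * (2 ** attempt))
    raise RuntimeError("all replicas failed") from last_error


# Hypothetical replicas for illustration.
def primary() -> str:
    raise ConnectionError("primary unavailable")


def backup() -> str:
    return "response from backup"


print(call_with_failover([primary, backup], base_delay_s=0.0))  # → response from backup
```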

Terms like “fail-safe” and “robustness” underscore the importance of reliability in software-driven environments.
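In its common engineering sense, "fail-safe" means that when a system is uncertain or encounters an error, it defaults to a conservative action instead of guessing. The sketch below wraps a hypothetical model that returns an action and a confidence score; the 0.9 threshold, the "stop" action, and all names are illustrative assumptions, not a standard API.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class Decision:
    action: str
    fallback: bool  # True when the safe default was used


def fail_safe(
    model: Callable[[dict], Tuple[str, float]],
    observation: dict,
    min_confidence: float = 0.9,
    safe_action: str = "stop",
) -> Decision:
    """Return the model's action only when it is confident enough; otherwise,
    or if the model raises, fall back to a predefined safe action."""
    try:
        action, confidence = model(observation)
    except Exception:
        return Decision(safe_action, fallback=True)
    if confidence < min_confidence:
        return Decision(safe_action, fallback=True)
    return Decision(action, fallback=False)


def toy_model(obs: dict) -> Tuple[str, float]:
    # Hypothetical model: confident only when the path is clear.
    return ("proceed", 0.97) if obs.get("path_clear") else ("proceed", 0.4)


print(fail_safe(toy_model, {"path_clear": True}))   # Decision(action='proceed', fallback=False)
print(fail_safe(toy_model, {"path_clear": False}))  # Decision(action='stop', fallback=True)
```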

Large Language Models (LLMs) represent a significant leap forward in the realm of artificial intelligence, illustrating the potential for groundbreaking innovations across the spectrum of AI applications.

The underlying architecture of LLMs is sophisticated, involving billions of parameters.

These models have been meticulously trained on diverse datasets, enabling them to understand and generate human-like text.

Impressively, LLMs have demonstrated capabilities that extend beyond mere text completion, showing a degree of comprehension and contextual awareness.

Their applications span from automating customer service interactions to enhancing machine translation services, gradually redefining user expectations in these areas.

Nevertheless, the path to perfection is intricate and ongoing. Ensuring robustness in LLMs involves continuous training and refinement to mitigate errors and biases.

Classification problems are fundamental to many AI applications, spanning various industries and use cases.

These problems rely on training algorithms with labeled data to categorize new, unseen instances accurately.
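To show what "training on labeled data" means in the simplest possible terms, the sketch below implements a nearest-centroid classifier: it averages the feature vectors for each label, then assigns a new instance to the label with the closest average. This algorithm is chosen purely for illustration and is not drawn from the text; production classifiers are far more elaborate, and the data and function names are hypothetical.

```python
from math import dist
from typing import Dict, List, Sequence, Tuple


def train_centroids(examples: Sequence[Tuple[Sequence[float], str]]) -> Dict[str, List[float]]:
    """Average the feature vectors of each label (nearest-centroid training)."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def classify(centroids: Dict[str, List[float]], features: Sequence[float]) -> str:
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labeled data: (feature vector, label).
training = [
    ([1.0, 1.2], "small"), ([0.8, 1.0], "small"),
    ([5.0, 5.5], "large"), ([5.2, 4.8], "large"),
]
model = train_centroids(training)
print(classify(model, [0.9, 1.1]))  # → small
print(classify(model, [4.9, 5.1]))  # → large
```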

As AI improves, classification accuracy continues to rise, driving advancements in multiple domains.

Spam filters exemplify the everyday application of AI, showcasing its ability to handle massive data efficiently. These filters work tirelessly, sorting through millions of emails to ensure users receive pertinent communications.

Despite occasional missteps, spam filters achieve remarkable accuracy, often surpassing our expectations. While not flawless, they remain impressively competent.

Notably, spam detection algorithms distinguish legitimate emails from spam with great precision. This is accomplished through machine learning techniques that continuously evolve.

Moreover, spam filters employ natural language processing and pattern recognition to maintain their effectiveness. They examine email characteristics, such as sender reputation and content, to make informed decisions.
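A classic textbook technique for content-based spam filtering is multinomial naive Bayes, which scores a message by how often its words appeared in previously labeled spam versus legitimate ("ham") mail. The sketch below covers only message content; real filters combine many more signals, including the sender reputation mentioned above, which is omitted here. The class name and training examples are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSpamFilter:
    """Multinomial naive Bayes over word counts with Laplace (add-one) smoothing."""

    def __init__(self) -> None:
        self.word_counts: Dict[str, Counter] = {"spam": Counter(), "ham": Counter()}
        self.doc_counts: Counter = Counter()

    def train(self, examples: List[Tuple[str, str]]) -> None:
        for text, label in examples:
            self.word_counts[label].update(tokenize(text))
            self.doc_counts[label] += 1

    def predict(self, text: str) -> str:
        if self.doc_counts["spam"] == 0 or self.doc_counts["ham"] == 0:
            raise ValueError("train on at least one spam and one ham example first")
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            # Log prior plus the smoothed log likelihood of each token.
            score = math.log(self.doc_counts[label] / total_docs)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / (total_words + len(vocab)))
            scores[label] = score
        return max(scores, key=scores.get)


spam_filter = NaiveBayesSpamFilter()
spam_filter.train([
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to tuesday", "ham"),
    ("please review the attached report", "ham"),
])
print(spam_filter.predict("free prize money"))           # → spam
print(spam_filter.predict("review the meeting report"))  # → ham
```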

Although spam filters are sometimes criticized for misclassifications, it is worth recognizing the magnitude of their task: they process email volumes no human team could handle, at an accuracy humans could not sustain at that scale.

In essence, spam filters demonstrate AI’s potential in everyday technology. Their evolution showcases the continuous improvement and increased reliability of AI applications.

AI stability remains a compelling pursuit.

As technology advances, the scope and impact of AI systems will expand. The key goal for the future is achieving unprecedented levels of reliability in dynamic environments such as households and workplaces. Real-world applications will therefore require more sophisticated algorithms and improved machine learning.

AI stability must match this complexity.

Achieving this degree of reliability requires extensive testing and refinement: a rigorous, trial-and-error process of fine-tuning machine performance. Potential missteps should also be anticipated and mitigated to ensure seamless integration.

The future development of AI stability depends on cooperation between researchers, industry leaders, and policymakers. By investing in next-generation AI technologies and fostering innovation, they can accelerate progress toward reliability that meets our elevated expectations. Through such collaboration, AI stability can reach new heights, making transformative technologies a defining part of daily life.

Understanding AI stability is crucial for the deployment of advanced technologies in everyday environments.

From household robots to spam filters, the reliability of AI systems has a direct impact on user experience and trust.

Achieving high levels of AI stability involves continuous refinements and testing to navigate dynamic settings effectively and safely.

Efforts from researchers, industry leaders, and policymakers are essential to meet the high expectations placed on AI technology in real-world applications.

As these collective efforts advance, AI stability will become more robust, shaping a future where AI seamlessly integrates into daily life.